I recommend building a simple neural network before jumping to a CNN. You can visit my post on building a simple neural network with Keras. The Jupyter notebook containing the following CNN code is also available for download.

Step 1: Import required libraries

from keras.datasets import mnist

from keras.utils import to_categorical

from keras.models import Sequential

from keras.layers import Conv2D, MaxPooling2D, Flatten, Dense, Dropout

from keras.optimizers import Adam

import matplotlib.pyplot as plt

%matplotlib inline

Step 2: Load MNIST dataset from Keras

(X_train, y_train), (X_test, y_test) = mnist.load_data()

The above line downloads the MNIST dataset. Now, let's print the shape of the data.

X_train.shape, X_test.shape, y_train.shape, y_test.shape

Output: ((60000, 28, 28), (10000, 28, 28), (60000,), (10000,))

The output shows that each image in the MNIST dataset is 28 x 28 pixels, so the shape of X_train is (60000, 28, 28), where 60,000 is the number of training samples.

We can visualize the images using the matplotlib library. Let's look at the first image.

plt.imshow(X_train[0], cmap='gray')

Step 3: Reshape the dataset

We have to reshape X_train and X_test from 3 dimensions to 4 dimensions because the Conv2D layer in Keras expects a four-dimensional input of shape (samples, height, width, channels).

X_train = X_train.reshape(X_train.shape[0], 28, 28, 1)

X_test = X_test.reshape(X_test.shape[0], 28, 28, 1)

The value of X_train.shape[0] is 60,000, the number of images in the training data. The two 28s are the image height and width, and 1 is the number of channels.

The number of channels is 1 for a grayscale image and 3 for an RGB image. Here the last number is 1, which signifies that the images are grayscale.

Now, let's print the shape of the data again.

X_train.shape, X_test.shape, y_train.shape, y_test.shape

Output: ((60000, 28, 28, 1), (10000, 28, 28, 1), (60000,), (10000,))
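
The reshape in this step can be tried without downloading the dataset. The following is a minimal sketch on a small stand-in array (the name `fake_images` and its size are made up for illustration). It also shows `np.expand_dims`, which should give the same result without spelling out the image size.

```python
import numpy as np

# Stand-in for X_train: 4 fake grayscale "images" of 28 x 28 pixels (illustrative only).
fake_images = np.zeros((4, 28, 28), dtype=np.uint8)

# Same pattern as in the tutorial: keep the sample count, append a channel axis of size 1.
reshaped = fake_images.reshape(fake_images.shape[0], 28, 28, 1)

# Equivalent alternative: add a new last axis without spelling out the sizes.
expanded = np.expand_dims(fake_images, axis=-1)

print(reshaped.shape)   # (4, 28, 28, 1)
print(expanded.shape)   # (4, 28, 28, 1)
```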

Step 4: Convert the image pixels in the range between 0 and 1

Let's print the first image:

X_train[0]

You will notice that it contains values ranging from 0 to 255. We scale this data to the range 0 to 1 because neural networks generally train faster and more stably when their inputs are small and on a similar scale. So, let's do it.

First, convert X_train and X_test to a float data type.

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

Now, divide all the values by 255.

X_train /= 255.0

X_test /= 255.0

Print the first image again:

X_train[0]

You will notice that all the pixel values now lie between 0 and 1, which is a suitable input range for our neural network.
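
The scaling in this step can be checked on a handful of values. This is a small sketch on a made-up `pixels` array rather than the real dataset. The cast to float comes first because NumPy rejects an in-place division of an integer array by a float.

```python
import numpy as np

# Stand-in for one row of pixels; real MNIST pixels are uint8 values in 0..255.
pixels = np.array([0, 51, 128, 255], dtype=np.uint8)

# Convert to float first, then scale into the range [0, 1].
scaled = pixels.astype("float32") / 255.0

print(scaled.min(), scaled.max())  # 0.0 1.0
```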

Step 5: Convert labels into categorical variables (one-hot encoding)

Our labels range from 0 to 9. We one-hot encode them so that each label becomes a vector of ten values in which the position of the true digit is 1 and all the others are 0.

y_train, y_test

y_train[0]

5

y_train = to_categorical(y_train)

y_test = to_categorical(y_test)

y_train, y_test

y_train[0]

array([0., 0., 0., 0., 0., 1., 0., 0., 0., 0.], dtype=float32)

Note how the label of the first image, 5, is transformed into one-hot encoded format: the value at index 5 is 1 and the rest are 0. You can try the same with different images and note their one-hot encoded representations.
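
To make the encoding less of a black box, here is a rough NumPy equivalent. The helper `one_hot` is a hypothetical name written for this illustration, not the Keras implementation, and it assumes the labels are integers from 0 to `num_classes - 1`.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Return a (len(labels), num_classes) float32 array with a single 1 per row."""
    labels = np.asarray(labels, dtype=int)
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(num_classes, dtype=np.float32)[labels]

encoded = one_hot([5, 0, 4])
print(encoded[0])  # [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
```

Taking `encoded.argmax(axis=1)` recovers the original integer labels.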

Step 6: Create a CNN model

model = Sequential()

model.add(Conv2D(32, kernel_size=(5, 5), input_shape=(28, 28, 1), padding='same', activation='relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(64, kernel_size=(5, 5), padding='same', activation='relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten())

model.add(Dense(1024, activation='relu'))

model.add(Dropout(0.2))

model.add(Dense(10, activation='softmax'))

Explanation of the above CNN model:

1. We have created a Sequential model, a built-in Keras model class that stacks layers in order. We just add the layers we need. In this case, we have added 2 convolutional layers, 2 pooling layers, 1 flatten layer, 2 dense layers and 1 dropout layer.

2. We have used 32 filters of size 5x5 in the first convolutional layer and 64 filters of the same size in the second convolutional layer.

3. We are using zero padding (padding='same') in each convolutional layer, so the output feature maps keep the height and width of the input.

4. After each convolutional layer, we add a max pooling layer with a pool size of 2x2, which halves the height and width.

5. We use the ReLU activation function in all hidden layers and softmax in the output layer. To know more about activation functions, please see my posts on them.

6. We can also specify the strides attribute for convolutional and pooling layers. By default it is (1, 1) for Conv2D, while for MaxPooling2D it defaults to the pool size.

7. We have a flatten layer just before the first dense layer. It converts the 3D feature maps (7 x 7 x 64) into a 1D vector of 3,136 values that the fully connected layers can take as input.

8. After that, we use a fully connected layer with 1024 neurons.

9. Then we use a regularization layer called Dropout. It is configured to randomly set about 20% of the outputs of the preceding layer to zero during training. Because the network cannot rely on any single neuron, it is pushed to learn more redundant features, which reduces overfitting. To know more about dropout, please see my post on it.

10. We add a dense layer at the end, which is used for class prediction (0 to 9). That is why it has 10 neurons. It is also called the output layer. This layer uses the softmax activation function instead of ReLU.
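
Two of the ideas in the list above, dropout and softmax, are easy to try in plain NumPy. The functions below are illustrative sketches with made-up names and inputs, not the Keras internals. The dropout version is the "inverted" variant, which rescales the surviving values so their expected sum stays the same.

```python
import numpy as np

def softmax(logits):
    """Turn a vector of raw scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exps = np.exp(shifted)
    return exps / exps.sum()

def dropout(activations, rate, rng):
    """Inverted dropout: zero each unit with probability `rate`, rescale the rest."""
    keep_mask = rng.random(activations.shape) >= rate
    return activations * keep_mask / (1.0 - rate)

rng = np.random.default_rng(0)
hidden = np.ones(1024, dtype=np.float32)      # stand-in for the 1024 dense outputs
dropped = dropout(hidden, rate=0.2, rng=rng)
probs = softmax(np.array([2.0, 1.0, 0.1]))    # made-up scores for 3 classes

print((dropped == 0).mean())  # roughly 0.2
print(probs.argmax())         # 0, the class with the largest score
```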

Step 7: Model Summary

model.summary()

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 28, 28, 32)        832
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 14, 14, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 14, 14, 64)        51264
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 7, 7, 64)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 3136)              0
_________________________________________________________________
dense_1 (Dense)              (None, 1024)              3212288
_________________________________________________________________
dropout_1 (Dropout)          (None, 1024)              0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                10250
=================================================================
Total params: 3,274,634
Trainable params: 3,274,634
Non-trainable params: 0
_________________________________________________________________
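
The parameter counts in the summary can be reproduced by hand, which is a useful check that the layer shapes are what we expect. The helper names below are made up for this sketch. The arithmetic assumes the usual layout of one bias per filter or unit.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights per filter (kernel_h * kernel_w * in_channels) plus one bias per filter."""
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(inputs, units):
    """One weight per input-unit pair plus one bias per unit."""
    return (inputs + 1) * units

conv1 = conv2d_params(5, 5, 1, 32)      # 832
conv2 = conv2d_params(5, 5, 32, 64)     # 51264
flat = 7 * 7 * 64                       # 3136 values after the second pooling layer
dense1 = dense_params(flat, 1024)       # 3212288
dense2 = dense_params(1024, 10)         # 10250

print(conv1 + conv2 + dense1 + dense2)  # 3274634
```

Pooling, flatten and dropout layers have no weights, which is why they show 0 parameters.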

Step 8: Compile the model

model.compile(Adam(lr=0.0001), loss='categorical_crossentropy', metrics=['accuracy'])

We are using the Adam optimizer with a learning rate of 0.0001 and categorical cross-entropy as the loss function. If you omit the learning rate, Keras uses Adam's default of 0.001. Note that newer Keras versions name this argument learning_rate instead of lr.
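
To get a feel for what the categorical cross-entropy loss measures, here is a small NumPy sketch. The function is written for illustration and the example probabilities are made up. The clipping constant `eps` is an assumption to avoid taking the log of zero.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -sum(y_true * log(y_pred)) over the samples in the batch."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

y_true = np.array([[0.0, 1.0, 0.0]])          # true class is index 1
confident = np.array([[0.05, 0.90, 0.05]])    # high probability on the true class
unsure = np.array([[0.40, 0.30, 0.30]])       # low probability on the true class

print(categorical_crossentropy(y_true, confident))  # about 0.105
print(categorical_crossentropy(y_true, unsure))     # about 1.204
```

The loss is small when the model puts most of its probability on the correct class and grows as that probability shrinks.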

Step 9: Train the model

history_cnn = model.fit(X_train, y_train, validation_data=(X_test, y_test), batch_size=128, epochs=5, verbose=2)

We train with a batch size of 128 for 5 epochs, using the test set as validation data.

Train on 60000 samples, validate on 10000 samples

Epoch 1/5

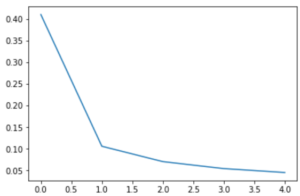

– 252s – loss: 0.4103 – acc: 0.8930 – val_loss: 0.1250 – val_acc: 0.9622

Epoch 2/5

– 253s – loss: 0.1059 – acc: 0.9691 – val_loss: 0.0725 – val_acc: 0.9778

Epoch 3/5

– 276s – loss: 0.0704 – acc: 0.9785 – val_loss: 0.0507 – val_acc: 0.9836

Epoch 4/5

– 310s – loss: 0.0542 – acc: 0.9834 – val_loss: 0.0403 – val_acc: 0.9870

Epoch 5/5

– 371s – loss: 0.0452 – acc: 0.9864 – val_loss: 0.0352 – val_acc: 0.9887
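
The batch size and number of epochs determine how many weight updates the optimizer performs. A quick back-of-the-envelope calculation, assuming the final partial batch is kept rather than dropped:

```python
import math

num_samples = 60000   # training images in MNIST
batch_size = 128
epochs = 5

steps_per_epoch = math.ceil(num_samples / batch_size)  # last batch is smaller
last_batch = num_samples - (steps_per_epoch - 1) * batch_size

print(steps_per_epoch)            # 469
print(last_batch)                 # 96
print(steps_per_epoch * epochs)   # 2345 weight updates in total
```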

Step 10: Print plots

Validation Accuracy Plot
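
A plot like this can be drawn from the history object returned by `model.fit`. The following is one possible sketch, not necessarily the code used for the original figure. The function names are made up, and the key lookup is there because older Keras releases log `acc`/`val_acc` while newer ones log `accuracy`/`val_accuracy`.

```python
def accuracy_curves(history_dict):
    """Return (train, validation) accuracy lists from a Keras-style history dict."""
    # Older Keras releases log "acc"/"val_acc"; newer ones use "accuracy"/"val_accuracy".
    key = "acc" if "acc" in history_dict else "accuracy"
    return history_dict[key], history_dict["val_" + key]

def plot_accuracy(history_dict):
    """Draw training and validation accuracy per epoch."""
    import matplotlib.pyplot as plt  # imported here so the helper above has no plotting dependency

    train_acc, val_acc = accuracy_curves(history_dict)
    epochs = range(1, len(train_acc) + 1)
    plt.plot(epochs, train_acc, label="Training accuracy")
    plt.plot(epochs, val_acc, label="Validation accuracy")
    plt.xlabel("Epoch")
    plt.ylabel("Accuracy")
    plt.legend()
    plt.show()

# Values copied from the training log above; with a real run, pass history_cnn.history instead.
logged = {
    "acc": [0.8930, 0.9691, 0.9785, 0.9834, 0.9864],
    "val_acc": [0.9622, 0.9778, 0.9836, 0.9870, 0.9887],
}
train_acc, val_acc = accuracy_curves(logged)
print(val_acc[-1])  # 0.9887
```

In the notebook, calling `plot_accuracy(history_cnn.history)` after training should display both curves.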

Step 11: Evaluate the model

score = model.evaluate(X_test, y_test, verbose=0)

print(score)

print('Loss:', score[0])

print('Accuracy:', score[1])

[0.03515956207765266, 0.9887]

Loss: 0.03515956207765266

Accuracy: 0.9887

I got an accuracy of about 98.87%. You can experiment with different hyperparameters such as the learning rate, batch size and number of epochs, or change the architecture by adding more convolutional and pooling layers or changing the number and size of filters and the stride.
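
The accuracy reported above is the fraction of test images whose most probable predicted class matches the true label. Here is a minimal sketch of that calculation on made-up data. In a real run, `y_pred` would come from `model.predict(X_test)`, and the helper name `accuracy` is only for illustration.

```python
import numpy as np

def accuracy(y_true_one_hot, y_pred_probs):
    """Fraction of samples whose most probable class matches the true class."""
    true_classes = np.argmax(y_true_one_hot, axis=1)
    predicted_classes = np.argmax(y_pred_probs, axis=1)
    return float(np.mean(true_classes == predicted_classes))

# Made-up example: 4 samples, 3 classes.
y_true = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]])
y_pred = np.array([[0.8, 0.1, 0.1],
                   [0.2, 0.7, 0.1],
                   [0.3, 0.4, 0.3],   # wrong: predicts class 1, true class is 2
                   [0.1, 0.6, 0.3]])

print(accuracy(y_true, y_pred))  # 0.75
```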
